Lauren Gold, PhD

About

I am currently a postdoc at Rice University, where my research on real-time rendering systems centers on developing immersive data visualization applications and multi-user XR experiences. My work has direct applications to NASA Mission Operations, particularly planetary terrain visualization for rover path planning and geological analysis. I bridge the gap between technical execution and scientific inquiry through my strengths in data processing, real-time rendering, AR/VR software development, and immersive user experience design.

Research Projects

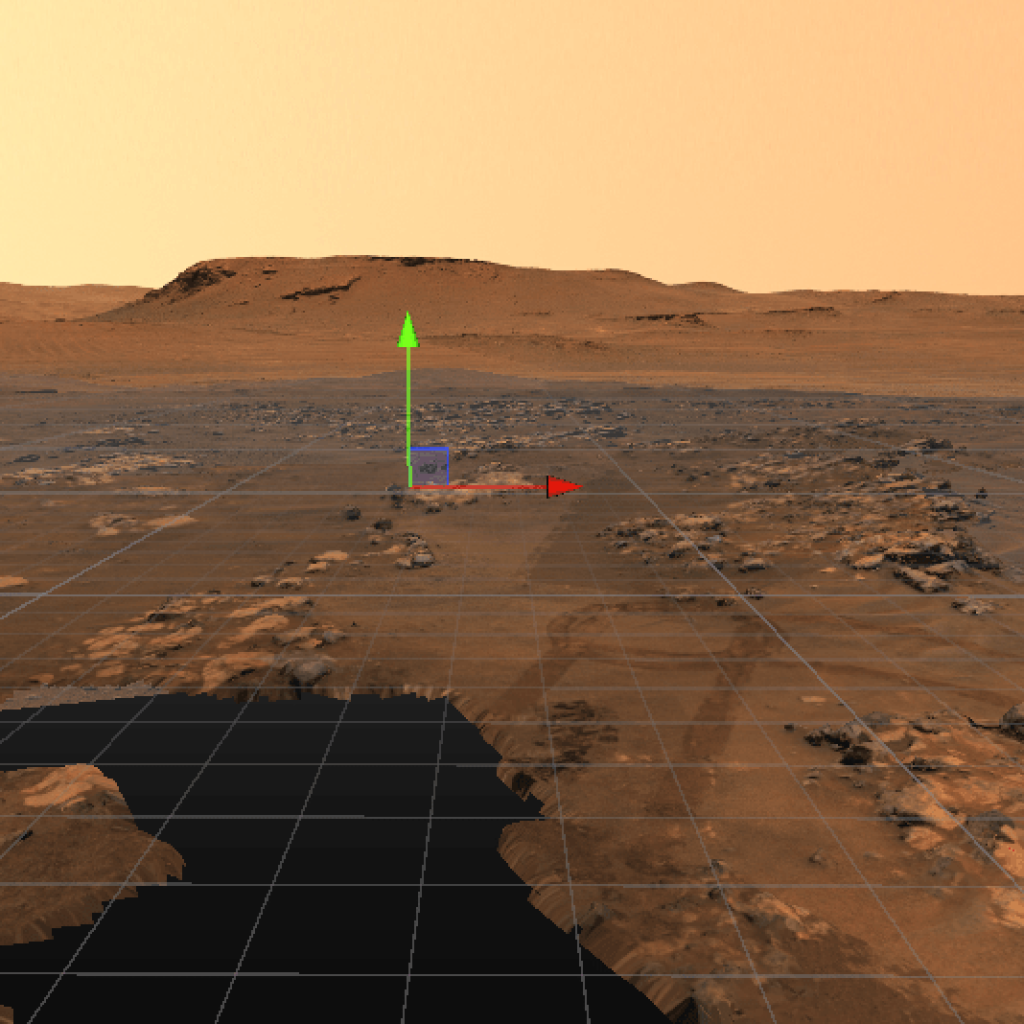

Real-Time Uncertainty Visualization

This project explores reprojection error through real-time rendering of point clouds of the Martian terrain. The input data come from Mastcam-Z, onboard NASA's Perseverance rover. The 3D positions of the points are derived from JPL's .XYZ products, which result from multi-view stereo processing. The visualization helps researchers understand error patterns and locate potential imprecision in camera calibration.
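
Reprojection error is the pixel distance between where a reconstructed 3D point projects into an image and where it was actually observed. A minimal sketch of that computation follows, assuming a linear CAHV camera model (the JPL CAHV family; flight camera models such as CAHVOR add lens-distortion terms that are omitted here). The helper names, the example camera, and the blue-to-red color ramp are illustrative assumptions, not this project's actual pipeline.

```python
import math

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cahv_project(p: Vec3, C: Vec3, A: Vec3, H: Vec3, V: Vec3) -> tuple[float, float]:
    """Project a 3D point to image (x, y) with a linear CAHV model (no distortion)."""
    d = sub(p, C)        # vector from the camera center C to the point
    depth = dot(d, A)    # distance along the optical axis A
    if depth <= 0:
        raise ValueError("point is behind the camera")
    return dot(d, H) / depth, dot(d, V) / depth


def reprojection_errors(points, observed, C, A, H, V) -> list[float]:
    """Pixel distance between each projected 3D point and its observed pixel."""
    errors = []
    for p, (u, v) in zip(points, observed):
        x, y = cahv_project(p, C, A, H, V)
        errors.append(math.hypot(x - u, y - v))
    return errors


def error_to_rgb(err: float, max_err: float) -> tuple[float, float, float]:
    """Map an error to a blue (low) -> red (high) ramp for per-point coloring."""
    t = min(err / max_err, 1.0) if max_err > 0 else 0.0
    return (t, 0.0, 1.0 - t)


# Hypothetical camera: optical axis +z, focal length 100 px, principal point (50, 50).
C, A = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
H, V = (100.0, 0.0, 50.0), (0.0, 100.0, 50.0)
errs = reprojection_errors([(1.0, 2.0, 10.0)], [(63.0, 74.0)], C, A, H, V)
print(errs)                                # [5.0]
print(error_to_rgb(errs[0], max_err=5.0))  # (1.0, 0.0, 0.0)
```

In a renderer, the per-point error would likely be computed once when the point cloud is loaded and uploaded as a vertex color, so the visualization itself stays real-time.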

Planetary Parfait

Planetary Parfait is a system that enables scientists to collaboratively explore planetary datasets and their many data layers across VR, AR, and desktop platforms. By integrating interactive 3D visualization into scientific workflows, it bridges the gap between traditional 2D tools and modern collaborative analysis.
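
The internals of Planetary Parfait are not described here. As a hypothetical sketch of the "many data layers" idea, a layer stack can be modeled as co-registered per-cell color rasters blended over a base terrain texture, each with its own opacity and visibility toggle. The names `DataLayer` and `composite` are illustrative, and a production renderer would perform this blending on the GPU rather than in Python.

```python
from dataclasses import dataclass

RGB = tuple[float, float, float]


@dataclass
class DataLayer:
    """Hypothetical data layer: one color per cell of a shared terrain grid."""
    name: str
    colors: list[RGB]        # flattened, co-registered with the base terrain
    opacity: float = 1.0     # user-adjustable blend weight in [0, 1]
    visible: bool = True


def composite(base: list[RGB], layers: list[DataLayer]) -> list[RGB]:
    """Blend visible layers bottom-to-top over the base ('over' with constant alpha)."""
    out = list(base)
    for layer in layers:                     # ordered bottom -> top
        if not layer.visible or layer.opacity <= 0.0:
            continue
        if len(layer.colors) != len(out):
            raise ValueError(f"layer {layer.name!r} is not co-registered with the base")
        a = layer.opacity
        out = [
            tuple(a * c + (1.0 - a) * o for c, o in zip(color, prev))
            for color, prev in zip(layer.colors, out)
        ]
    return out


terrain = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
mineral = DataLayer("mineral map", [(1.0, 1.0, 1.0), (0.0, 0.0, 1.0)], opacity=0.5)
print(composite(terrain, [mineral]))  # [(0.5, 0.5, 0.5), (0.5, 0.0, 0.5)]
```

Keeping layers as independent records (rather than baking them into one texture) is what would let each platform, VR, AR, or desktop, toggle and reorder them interactively.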

Common Ground

Common Ground explores cross-virtual interactions to improve communication and group awareness in multiscale data analysis through scale-adaptive awareness cues.
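
The exact cue design is not specified here, so the following is only a hypothetical illustration of what "scale-adaptive" could mean: when collaborators explore the same data at very different scales, a remote user's awareness cue is resized by the ratio of their scales, compressed logarithmically and clamped so it neither vanishes nor fills the view. The function name, the log-compression, and the clamp bounds are all assumptions.

```python
import math


def cue_scale(local_scale: float, remote_scale: float,
              min_s: float = 0.5, max_s: float = 4.0) -> float:
    """Hypothetical size multiplier for a remote collaborator's awareness cue.

    local_scale / remote_scale: each user's size relative to the shared data
    (e.g. 10.0 means the user is 10x "larger" than real scale). Differences
    are compressed logarithmically and clamped to [min_s, max_s].
    """
    if local_scale <= 0 or remote_scale <= 0:
        raise ValueError("scales must be positive")
    ratio = remote_scale / local_scale
    if ratio >= 1.0:
        s = 1.0 + math.log2(ratio)                 # larger collaborator -> larger cue
    else:
        s = 1.0 / (1.0 + math.log2(1.0 / ratio))   # smaller collaborator -> smaller cue
    return max(min_s, min(max_s, s))


for remote in (1.0, 2.0, 8.0, 0.5, 0.001):
    print(remote, cue_scale(1.0, remote))
```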

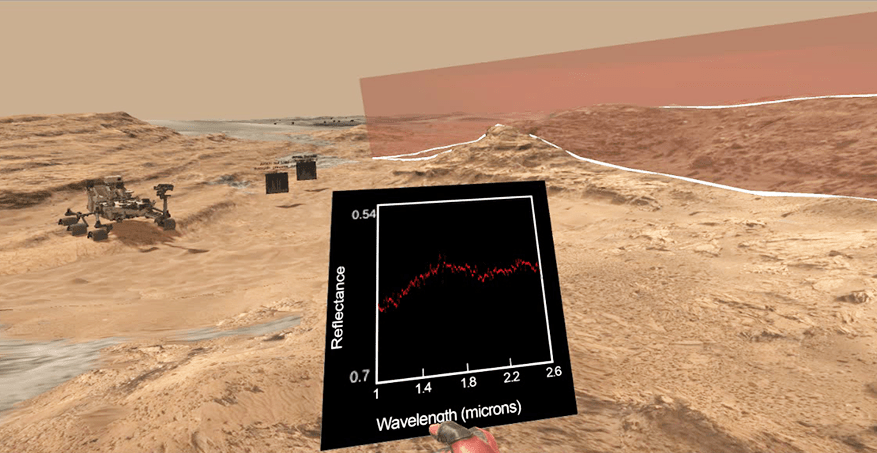

Visualizing Orbital Spectroscopy through Onsite Rendering

Planetary Visor is a virtual reality tool for visualizing orbital and rover-based datasets from the ongoing traverse of NASA's Curiosity rover in Gale Crater. Data from orbital spectrometers provide insight into the composition of planetary terrain, while Curiosity rover data provide fine-scale, localized information about Martian geology. By visualizing the intersection of the orbiting instrument's field of view with rover-scale topography and providing interactive navigation controls, Visor gives users a platform to intuitively understand the scale and context of the Martian geologic data under scientific investigation.
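
How Visor computes this intersection is not stated here. One simple approximation, sketched below under stated assumptions, treats the spacecraft as far enough away that lines of sight are parallel, defines a circular pixel footprint on a z = 0 reference plane, and slides each heightmap cell along the line of sight down to that plane to test membership. This captures the parallax that elevated terrain introduces for off-nadir looks, but ignores occlusion; the function name, grid layout, and units are hypothetical.

```python
import math


def footprint_mask(heights, cell_size, center, radius, look_dir):
    """Boolean mask of heightmap cells seen by one orbital spectrometer pixel.

    heights:  2D list [row][col] of elevations in meters (hypothetical grid).
    center:   (x, y) footprint center on the z = 0 reference plane, meters.
    radius:   footprint radius on that plane, meters.
    look_dir: (dx, dy, dz) from the ground toward the spacecraft, dz > 0.
    Assumes parallel lines of sight and ignores occlusion by other terrain.
    """
    dx, dy, dz = look_dir
    if dz <= 0:
        raise ValueError("look direction must point upward")
    mask = []
    for r, row in enumerate(heights):
        mask_row = []
        for c, z in enumerate(row):
            x = (c + 0.5) * cell_size     # cell center, meters
            y = (r + 0.5) * cell_size
            # Slide the cell along the line of sight down to the reference plane.
            x0 = x - z * dx / dz
            y0 = y - z * dy / dz
            mask_row.append(math.hypot(x0 - center[0], y0 - center[1]) <= radius)
        mask.append(mask_row)
    return mask


# A 1 m bump east of the footprint center falls inside it for an off-nadir look.
bump = [[0.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
for row in footprint_mask(bump, 1.0, (1.5, 1.5), 0.5, (1.0, 0.0, 1.0)):
    print(row)
```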

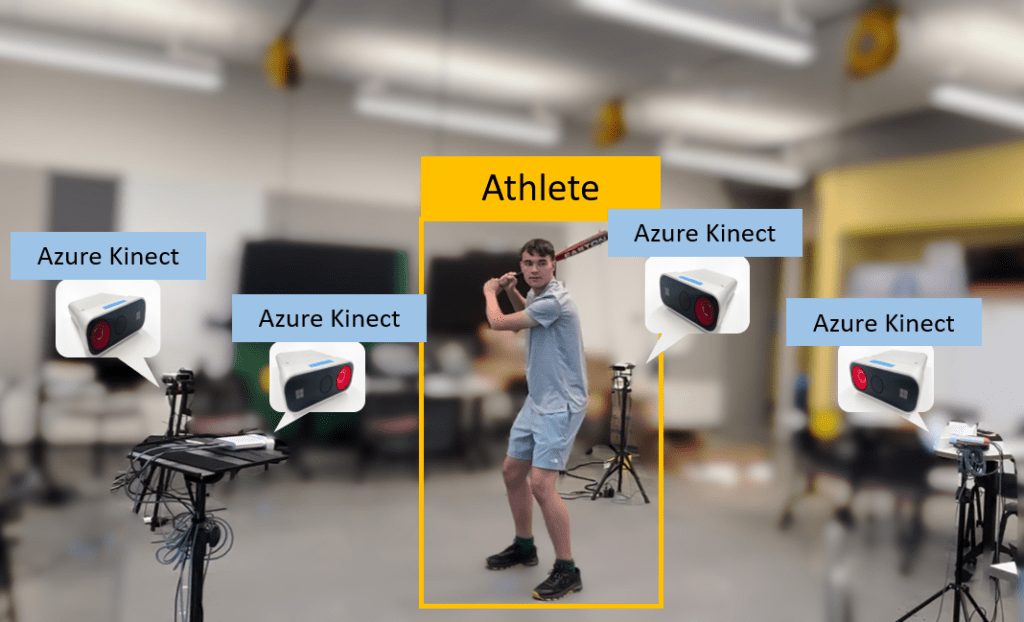

Augmented Coach

Augmented Coach uses volumetric data to create 3D point cloud videos of athletes, enabling spatial movement analysis with immersive annotation tools. Designed to enhance remote coaching across various sports, the system improves feedback and performance evaluation, as demonstrated in studies with certified coaches and athletes.
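
As a hypothetical illustration of spatial movement analysis on point cloud videos, the sketch below assumes frames captured at a fixed frame rate and annotations stored as 3D points a coach places on the athlete (for example hip, knee, and ankle). It picks the frame to display for a playback time and measures the joint angle between three annotated points. The function names are illustrative, not Augmented Coach's API.

```python
import math


def frame_index(t_seconds: float, fps: float, n_frames: int) -> int:
    """Frame to display at playback time t, clamped to the valid range."""
    return max(0, min(int(t_seconds * fps), n_frames - 1))


def joint_angle_deg(a, b, c) -> float:
    """Angle ABC in degrees between three annotated 3D points (vertex at b)."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        raise ValueError("annotation points must be distinct")
    cos_theta = sum(x * y for x, y in zip(ba, bc)) / norm
    # Clamp to guard acos against tiny floating-point overshoot.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


hip, knee, ankle = (0.0, 1.0, 0.0), (0.0, 0.5, 0.1), (0.0, 0.0, 0.0)
print(frame_index(2.5, fps=30, n_frames=300), joint_angle_deg(hip, knee, ankle))
```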

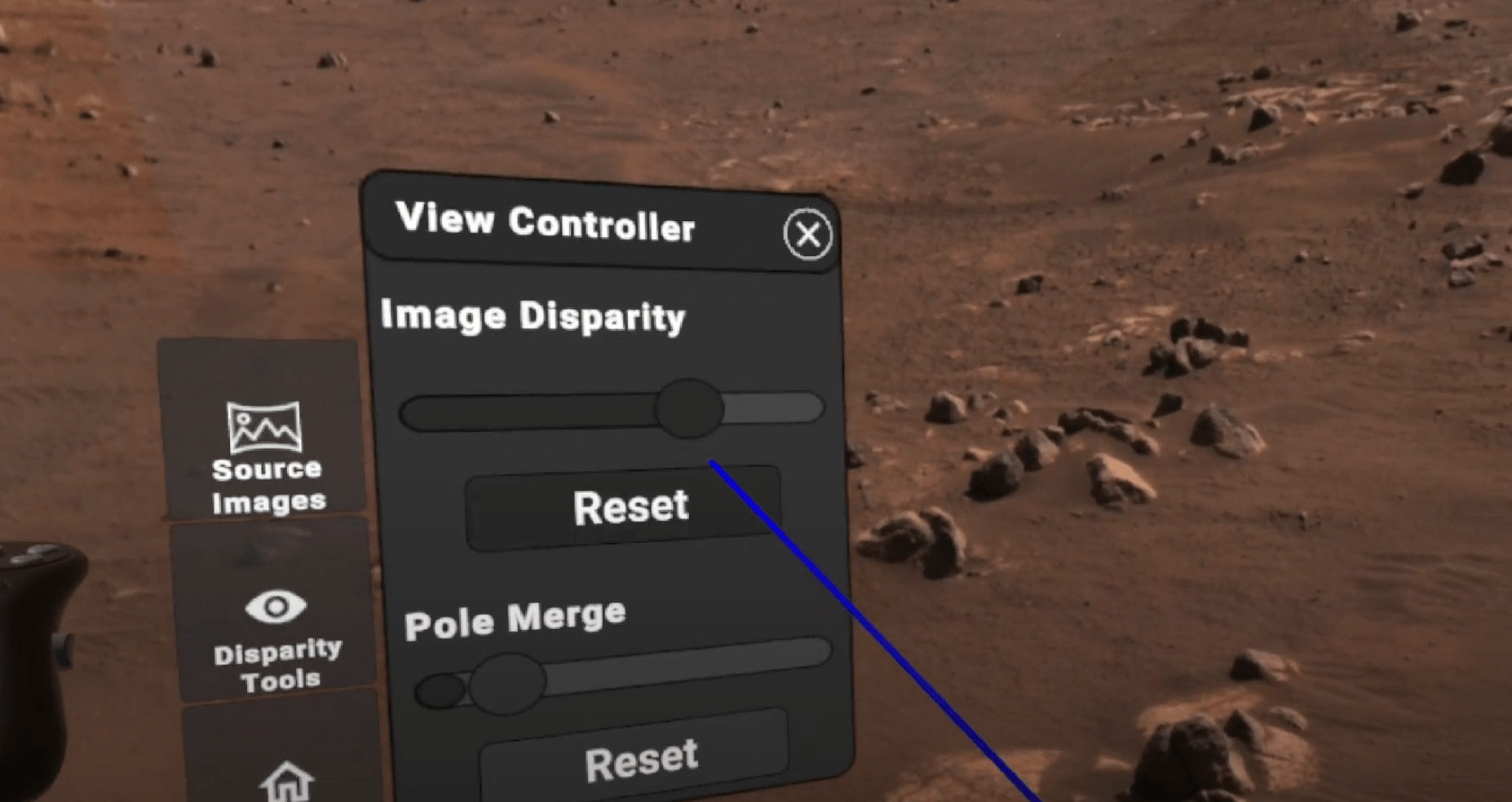

VR Image Browsing Extension

The VR Image Browsing Extension (VIBE) is a stereoscopic VR panorama viewer for the Meta Quest 2 that enhances situational awareness for Mars rover path planning and science target evaluation. The viewer offers immersive, high-resolution 3D environments with an intuitive UI/UX, automated scene generation, and data overlays that support terrain hazard assessment.
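
A stereoscopic panorama viewer typically renders each eye from its own panorama image. Assuming, since the storage format is not stated here, that each eye's panorama uses an equirectangular projection, the lookup from a view direction to a texel can be sketched as follows. The axis convention (+y up, -z forward) and the function names are illustrative assumptions; on a headset this math would normally run per fragment in a shader, with the left and right eyes sampling their own images at the same (u, v).

```python
import math


def direction_to_equirect_uv(x: float, y: float, z: float) -> tuple[float, float]:
    """Map a view direction to (u, v) in [0, 1] on an equirectangular panorama.

    Assumed convention: +y up, -z forward (u = 0.5), u increases to the right,
    v = 0 at the zenith and v = 1 at the nadir.
    """
    n = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / n, y / n, z / n
    azimuth = math.atan2(x, -z)                     # 0 when looking straight ahead
    elevation = math.asin(max(-1.0, min(1.0, y)))   # +pi/2 straight up
    return 0.5 + azimuth / (2 * math.pi), 0.5 - elevation / math.pi


def uv_to_pixel(u: float, v: float, width: int, height: int) -> tuple[int, int]:
    """Nearest-texel coordinates, clamped so u = 1 or v = 1 stays in bounds."""
    return min(int(u * width), width - 1), min(int(v * height), height - 1)


u, v = direction_to_equirect_uv(1.0, 0.0, 0.0)   # looking to the right
print(u, v, uv_to_pixel(u, v, 4096, 2048))
```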

News & Visits

- Attended the Lunar Digital Twins Workshop at JPL (07-2024)

- Hosted the Planetary Terrain Building Workshop in collaboration with JPL: Part 1 at ASU (02-2023) and Part 2 at JPL (04-2024)

- MobiSys ’22 Best Demo Award (06-2022)